Manufacturers who use machine learning (ML) in their medical devices or IVDs must comply with numerous regulatory requirements.

This article provides an overview of the most important regulations and best practices for implementation. It saves you the trouble of researching and reading hundreds of pages and helps you prepare perfectly for your next audit.

- AI/ML-specific regulatory and normative requirements are still emerging; they are inconsistent, incomplete, and changing rapidly.

- These requirements vary greatly across different markets.

- Manufacturers should first apply AI/ML-non-specific requirements (e.g., validation) to medical devices with machine learning.

- The guide from the Johner Institute, on which the questionnaire from TeamNB is based, describes the state of the art in Europe and consolidates all regulatory and normative requirements.

- Implementing the requirements requires good communication between regulatory affairs and quality management on the one hand, and data scientists and machine learning experts on the other.

This keyword article on artificial intelligence provides an overview and links to further specialist articles on the topic.

1. Legal requirements for the use of machine learning in medical devices

a) EU Medical Device Law

Obviously, medical devices that use machine learning must also comply with existing regulatory requirements such as the MDR and IVDR, e.g.:

- Manufacturers must demonstrate the benefits and performance of the medical devices. For example, devices used for diagnosis require proof of diagnostic sensitivity and specificity.

- The MDR requires manufacturers to ensure that devices are safe. This includes designing software so that it achieves repeatability, reliability, and performance (see MDR Annex I, 17.1 or IVDR Annex I, 16.1).

- Manufacturers must formulate a precise intended purpose (MDR/IVDR Annex II). They must validate their devices against the intended purpose and stakeholder requirements and verify them against the specifications (including MDR Annex I, 17.2 or IVDR Annex I, 16.2). Manufacturers are also required to describe the methods they use to provide this evidence.

- If the clinical evaluation is based on a comparative product, there must be technical equivalence, which explicitly includes the evaluation of the software algorithms (MDR Annex XIV, Part A, Paragraph 3). This is even more difficult in the case of performance evaluation of in vitro diagnostic medical devices (IVDs). Only in well-justified cases can a clinical performance study be waived (IVDR Annex XIII, Part A, Paragraph 1.2.3).

- The development of the software that will become part of the device must take into account the “principles of development life cycle, risk management, including information security, verification and validation” (MDR Annex I, 17.2 or IVDR Annex I, 16.2).
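The diagnostic sensitivity and specificity mentioned in the list above can be illustrated with a short calculation. The following is a minimal sketch, not a prescribed method: the counts are hypothetical, and the Wilson score interval is only one common choice for reporting the uncertainty of a proportion.

```python
import math


def wilson_interval(successes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is roughly 95 %)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return center - half, center + half


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Point estimates and intervals from the four cells of a confusion matrix."""
    return {
        "sensitivity": (tp / (tp + fn), wilson_interval(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_interval(tn, tn + fp)),
    }


# Hypothetical test-set counts (assumption, for illustration only)
result = sensitivity_specificity(tp=90, fn=10, tn=160, fp=40)
for name, (estimate, (low, high)) in result.items():
    print(f"{name}: {estimate:.3f} (interval {low:.3f} to {high:.3f})")
```

Reporting the interval alongside the point estimate makes it visible when a test set is too small to support the claimed performance.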

There are currently no laws or harmonized standards that specifically regulate the use of machine learning in medical devices. However, two standards are currently under development:

- IEC 62366-3 on the application of usability engineering according to IEC 62366-1 to AI-based medical devices. This standard will probably incorporate the contents of this document, to which the Johner Institute also contributed.

- ISO 24971-2 on the application of risk management according to ISO 14971 to AI-based medical devices.

b) EU AI Act

The AI Act applies to most medical devices and IVDs that use artificial intelligence methods, particularly machine learning. If a notified body must be involved in the conformity assessment of these devices, i.e., if the devices do not fall into the lowest class, they are even classified as “high-risk AI systems” under the AI Act.

A detailed article on the AI Act presents the requirements of this EU regulation, describes the impact on manufacturers, and provides tips for implementation.

The EU intends to revise its digital regulations as part of a “digital omnibus.” This also includes AI regulation. However, no concrete plans are known at this time.

2. Legal requirements for the use of machine learning in medical devices in the USA

a) Unspecific requirements

The FDA has many requirements that are not specific to machine learning products but are nevertheless relevant:

- Cybersecurity Guidance

- 21 CFR part 820 (including part 820.30 with design controls)

- Software Validation Guidance

- Off-the-shelf (OTS) software Guidance

Executive Order 14110 on the safe, secure, and trustworthy development and use of AI was rescinded by the Trump administration on January 20, 2025.

NIST's (voluntary) AI Risk Management Framework remains in place, including the Generative AI Profile NIST AI 600-1.

b) Specific requirements

Frameworks

In April 2019, the FDA published the draft Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD). In it, the agency introduces the concept of “Predetermined Change Control Plans” (PCCPs).

Manufacturers can describe planned changes in such a PCCP and have them approved when the product is approved. The agency then does not expect a new submission when these changes are implemented.

Examples of changes planned in a PCCP

- Improvement of clinical and analytical performance: This could be achieved by training with more data sets.

- Change in the “input data” processed by the algorithm. This could be additional laboratory data or data from another CT manufacturer.

- Change in intended use: As an example, the FDA cites that the algorithm initially only calculates a “confidence score” to support the diagnosis and later calculates the diagnosis directly. A change in the intended patient population also counts as a change in intended use.

This approach requires several prerequisites, which the FDA refers to as pillars:

- Quality management system and “Good Machine Learning Practices” (GMLP)

- Planning and initial assessment of safety and performance

- Approach to evaluating changes after initial approval

- Transparency and monitoring of performance in the market

The FDA has now specified these requirements in its document “Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD)”.

Guidance Documents

In April 2023, the agency incorporated this framework into the guidance document “Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions”.

In August 2025, the FDA again revised the December 2024 guidance on predetermined change control plans for AI-based products. The changes are minor, although the agency has changed the definition of machine learning.

Another FDA guidance document on radiological imaging does not directly address AI-based medical devices, but it is still helpful. On the one hand, many AI/ML-based medical devices work with radiological image data, and on the other hand, the document identifies sources of error that are particularly relevant for ML-based products:

- Patient characteristics: demographic and physiological characteristics, motion artifacts, implants, spatially heterogeneous distribution of tissue, calcifications, etc.

- Imaging characteristics: positioning, specific properties of medical devices, imaging parameters (e.g., sequences in MRI or X-ray doses in CT), algorithms for image reconstruction, external sources of interference, etc.

- Image processing: filtering, different software versions, manual selection and segmentation of areas, curve fitting, etc.

In January 2026, the FDA published a draft of the guidance document “Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations”. In it, the FDA describes its expectations regarding:

- Quality management

- Product description (e.g., the fact that AI is used and for what purpose; extended intended use with requirements for users, clinical context, etc.; including a description of the UI elements)

- User interface (detailed description of how users interact with the product and AI)

- Labeling (focus on transparency regarding the use of AI, its limitations, etc. Also expected inputs, explanation of outputs, the model, and performance)

- Risk management (little AI-specific)

- Data management (explanation of how data is collected, processed, annotated, used for training, etc. Also statement on patient collectives and biases)

- Model description and model development

- Validation (primarily references to usability, also some notes on validating the models themselves, such as testing performance metrics in patient subgroups; also specifications for “study design”)

- Monitoring of the product on the market

- Cybersecurity (including AI-specific threats such as data poisoning, data leakage, etc. The connection between cyberattacks on the one hand and overfitting, model bias, and performance drift on the other is not entirely clear).

This document also sets out the documentation requirements that the FDA expects to receive with a submission.
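The expectation to test performance metrics in patient subgroups, mentioned in the validation item above, can be sketched as follows. This is an illustrative example only: the subgroup names, the records, and the 0.05 threshold are hypothetical assumptions and are not taken from the FDA draft.

```python
from collections import defaultdict


def sensitivity_by_subgroup(records):
    """records: iterable of (subgroup, y_true, y_pred) with binary labels 0/1."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:  # only positive cases contribute to sensitivity
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = sorted(set(tp) | set(fn))
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def overall_sensitivity(records):
    """Sensitivity over all positive cases, regardless of subgroup."""
    positives = [(t, p) for _, t, p in records if t == 1]
    return sum(1 for _, p in positives if p == 1) / len(positives)


def flag_underperforming(per_group, overall, max_gap=0.05):
    """Subgroups whose sensitivity is more than max_gap below the overall value."""
    return [g for g, value in per_group.items() if overall - value > max_gap]


# Hypothetical records: (subgroup, ground truth, model prediction)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 1), ("B", 1, 0), ("B", 0, 1),
]
per_group = sensitivity_by_subgroup(records)
overall = overall_sensitivity(records)
flagged = flag_underperforming(per_group, overall)
print(per_group, overall, flagged)
```

A subgroup that is flagged this way is a starting point for investigating bias in the training data, not proof of it, since small subgroups produce noisy estimates.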

Further best practices

The FDA, Health Canada, and the UK MHRA (Medicines and Healthcare Products Regulatory Agency) have collaborated to publish Good Machine Learning Practice for Medical Device Development: Guiding Principles. The document contains ten guiding principles that should be observed when using machine learning in medical devices. Due to its brevity of only two pages, the document does not go into detail, but it does summarize the most important principles.

Addendum

The updated “Guiding Principles” for medical devices that use machine learning methods are valuable. These were developed by the FDA in collaboration with Health Canada. This results in two lists:

- Good Machine Learning Practice: Guiding Principles

- Transparency for Machine Learning-Enabled Medical Devices: Guiding Principles

The second and new document is the more specific one, which elaborates on principles 7 and 9 from the first document.

c) Requirements of individual states

AI regulation in the US is not uniform. Individual states have their own requirements:

California:

- Chatbot Act (Senate Bill 243)

- Transparency in Frontier Artificial Intelligence Act (TFAIA)

- California Senate Bill 53 (also reporting requirement)

Colorado: Artificial Intelligence Act (primarily AI consumer protection)

3. Legal requirements for the use of machine learning for medical devices in other countries

a) China: NMPA

The Chinese NMPA has released the draft “Technical Guiding Principles of Real-World Data for Clinical Evaluation of Medical Devices” for comment.

However, this document is currently only available in Chinese. We have had the table of contents translated automatically. The document addresses:

- Requirements analysis

- Data collection and preprocessing

- Design of the model

- Verification and validation (including clinical validation)

- Post-Market Surveillance

Download: China-NMPA-AI-Medical-Device

The authority is expanding its staff and has established an AI Medical Device Standardization Unit. This unit is responsible for standardizing terminologies, technologies, and processes for development and quality assurance.

Since September 1, 2025, there have also been requirements regarding AI-generated content and its labeling, as well as registration obligations. The focus is on the content(!) that the AI generates.

b) Japan

The Japanese Ministry of Health, Labor, and Welfare is also working on AI standards. Unfortunately, the authority publishes the progress of these efforts only in Japanese. (Translation programs are helpful, however.) Concrete output is still pending.

c) Other countries

Switzerland: On February 12, 2025, the Federal Council signed the Council of Europe Framework Convention on Artificial Intelligence (CAI).

Canada: Here, the Artificial Intelligence and Data Act (AIDA) should be noted as part of Bill C-27, which is still under development.

Australia: The Voluntary AI Safety Standards are already available in a second version.

4. Standards and best practices relevant for machine learning

a) Best practices

The following table provides an overview:

| Publisher | Title | Contents | Rating |

| COCIR | Artificial Intelligence in Healthcare | No specific new requirements; refers to existing standards and recommends the development of standards | Not very helpful |

| Xavier University | Perspectives and Good Practices for AI and Continuously Learning Systems in Healthcare. | This (also) concerns continuously learning systems. Nevertheless, many of the best practices mentioned can also be applied to non-continuously learning systems. | Particularly helpful for continuously learning systems. The Johner Institute’s guidelines are taken into account. |

| International Software Testing Qualifications Board (ISTQB) | Certified Tester AI Testing (CT-AI) Syllabus | ISTQB provides a syllabus for testing AI systems, which is available for download. | Recommended |

| ANSI in collaboration with CTA (Consumer Technology Association) | Definitions and Characteristics of Artificial Intelligence (ANSI/CTA-2089) Definitions/Characteristics of Artificial Intelligence in Health Care (ANSI/CTA-2089.1) | Definitions | Only useful as a collection of definitions |

| WHO / ITU | Good practices for health applications of machine learning: Considerations for manufacturers and regulators | The Johner Institute has provided significant input for this AI4H initiative. Coordination of these results with the IMDRF is planned. | Recommended, but already taken into account in the Johner Institute’s guidelines |

| TeamNB and IG-NB | IG_NB questionnaire TeamNB questionnaire | Both are based on the Johner Institute guidelines. | “Must read” as used by notified bodies |

| IMDRF | Good machine learning practice for medical device development – Guiding Principles | | |

b) Standards

Individual standards

| Title | Contents | Evaluation |

| IEC/TR 60601-4-1 | Requirements for “Medical electrical equipment and medical electrical systems with a degree of autonomy.” However, these requirements are not specific to medical devices that use machine learning methods. | Conditionally helpful |

| SPEC 92001-1 “Artificial intelligence – Life cycle processes and quality requirements – Part 1: Quality meta-model” | Presents a meta-model, but does not specify any concrete requirements for the development of AI/ML systems. The document is unspecific and not geared toward any particular industry. | Not very helpful |

| SPEC 92001-2 “Artificial intelligence – Life cycle processes and quality requirements – Part 2: Robustness” | Unlike the first part, this part contains specific requirements. These are primarily aimed at risk management. However, they are not specific to medical devices. | |

| ISO/IEC CD TR 29119-11 | We have read and evaluated this standard for you. | Ignore |

| ISO 24028 – Overview of Trustworthiness in AI | Not specific to a particular domain, but also cites examples from the healthcare sector. No concrete recommendations and no specific requirements. Mind map with chapter overview | Conditionally recommended |

| ISO 23053 – Framework for AI using Machine Learning | Guidelines for a development process for ML models. Does not contain any specific requirements, but represents the state of the art. | Conditionally recommended |

| ISO/IEC 25059:2023 Software engineering — Systems and software Quality Requirements and Evaluation (SQuaRE) — Quality model for AI systems | Not yet analyzed | |

| ISO/DTS 24971-2 Medical devices — Guidance on the application of ISO 14971 Part 2: Machine learning in artificial intelligence | Available as a draft. Specific focus: “Machine learning in artificial intelligence” (sic!). Once again, Pat Baird is in charge, as with BS 34971, a guideline on the same topic. | Recommended in particular as a checklist, even if concepts are inconsistent |

| BS/AAMI 34971 | Comparable to ISO/TS 24971-2 Mind map with chapter structure | Better to read ISO/TS 24971-2; very expensive |

| BS ISO/IEC 23894:2023 “Information technology. Artificial intelligence. Guidance on risk management.” | No duplication of BS 34971, as this standard is not specific to medical devices. | Ignore |

| BS 30440:2023 “Validation framework for the use of artificial intelligence (AI) within healthcare. Specification.” | This standard is intended not only for manufacturers, but also for operators, health insurance companies, and users. | |

| ISO/IEC 42001:2023 “Information technology – Artificial intelligence– Management system.” | Please refer to this technical article on ISO/IEC 42001, which provides an overview of the standard and its requirements as well as specific practical tips for implementation. | Mandatory in some markets |

| ISO/IEC 5338:2023 Information technology — Artificial intelligence — AI system life cycle processes | Describes a total of 33 processes; no reference to medical devices. Strong focus on software development; consistently references the software standards ISO/IEC/IEEE 12207 and ISO/IEC/IEEE 15288 | Recommended with reservations |

| ISO/IEC TS 4213:2022 Information technology — Artificial intelligence — Assessment of machine learning classification performance | The standard lists statistical methods depending on the task of the model. For binary classifications, it mentions, among other things, the 4-field table (“confusion matrix”), the F1 score, and the “lift curve.” For “multi-label classification,” it mentions “Hamming loss,” “Jaccard index,” and others. | The methods are described so briefly that the value of the standard lies more in compiling the appropriate methods for performance measurement for the model’s task. The gain in knowledge for experienced data scientists and statisticians is therefore unclear. Part of the value is provided by the table of contents of ISO/IEC TS 4213:2022. |
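To make the metrics named in the ISO/IEC TS 4213 row more tangible, here is a minimal sketch that computes the F1 score for a binary task and Hamming loss and a sample-averaged Jaccard index for a multi-label task. The formulas are the common textbook definitions and are not quoted from the standard; all data are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall: 2*TP / (2*TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def hamming_loss(rows_true, rows_pred):
    """Fraction of individual label positions that are predicted wrongly."""
    total = sum(len(row) for row in rows_true)
    wrong = sum(t != p for rt, rp in zip(rows_true, rows_pred) for t, p in zip(rt, rp))
    return wrong / total


def jaccard_index(rows_true, rows_pred):
    """Mean over samples of |intersection| / |union| of the label sets."""
    scores = []
    for rt, rp in zip(rows_true, rows_pred):
        inter = sum(1 for t, p in zip(rt, rp) if t == 1 and p == 1)
        union = sum(1 for t, p in zip(rt, rp) if t == 1 or p == 1)
        scores.append(inter / union if union else 1.0)
    return sum(scores) / len(scores)


# Hypothetical binary example
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
print(confusion_counts(y_true, y_pred), f1_score(y_true, y_pred))

# Hypothetical multi-label example (three labels per sample)
rows_true = [[1, 0, 1], [0, 1, 0]]
rows_pred = [[1, 1, 1], [0, 1, 0]]
print(hamming_loss(rows_true, rows_pred), jaccard_index(rows_true, rows_pred))
```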

The video training courses in Auditgarant introduce important methods such as LRP, LIME, the visualization of neural layer activation, and counterfactuals.

IEEE standards

A whole family of standards is currently being developed by the IEEE:

- P7001 – Transparency of Autonomous Systems

- P7002 – Data Privacy Process

- P7003 – Algorithmic Bias Considerations

- P7009 – Standard for Fail-Safe Design of Autonomous and Semi-Autonomous Systems

- P7010 – Wellbeing Metrics Standard for Ethical Artificial Intelligence and Autonomous Systems

- P7011 – Standard for the Process of Identifying and Rating the Trustworthiness of News Sources

- P7014 – Standard for Ethical Considerations in Emulated Empathy in Autonomous and Intelligent Systems

- 1 – Standard for Human Augmentation: Taxonomy and Definitions

- 2 – Standard for Human Augmentation: Privacy and Security

- 3 – Standard for Human Augmentation: Identity

- 4 – Standard for Human Augmentation: Methodologies and Processes for Ethical Considerations

- P2801 – Recommended Practice for the Quality Management of Datasets for Medical Artificial Intelligence

- P2802 – Standard for the Performance and Safety Evaluation of Artificial Intelligence Based Medical Device: Terminology

- P2817 – Guide for Verification of Autonomous Systems

- 1.3 – Standard for the Deep Learning-Based Assessment of Visual Experience Based on Human Factors

- 1 – Guide for Architectural Framework and Application of Federated Machine Learning

ISO standards currently under development

Several working groups at ISO are also working on AI/ML-specific standards:

- ISO 20546 – Big Data – Overview and Vocabulary

- ISO 20547-1 – Big data reference architecture – Part 1: Framework and application process

- ISO 20547-2 – Big Data reference architecture – Part 2: Use cases and derived requirements

- ISO 20547-3 – Big Data reference architecture – Part 3: Reference architecture

- ISO 20547-5 – Big Data reference architecture – Part 5: Standards roadmap

- ISO 22989 – AI Concepts and Terminology

- ISO 24027 – Bias in AI systems and AI aided decision making

- ISO 24029-1 – Assessment of the robustness of neural networks – Part 1 Overview

- ISO 24029-2 – Formal methods methodology

- ISO 24030 – Use cases and application

- ISO 24368 – Overview of ethical and societal concerns

- ISO 24372 – Overview of computation approaches for AI systems

- ISO 24668 – Process management framework for big data analytics

- ISO 38507 – Governance implications of the use of AI by organizations.

ISO/IEC 5259 family of standards

An entire family of standards also deals with “Artificial intelligence — Data quality for analytics and machine learning (ML)”.

| Standard | Title |

| ISO/IEC 5259-1 | Part 1: Overview, terminology, and examples |

| ISO/IEC 5259-2 | Part 2: Data quality measures |

| ISO/IEC 5259-3 | Part 3: Data quality management requirements and guidelines |

| ISO/IEC 5259-4 | Part 4: Data quality process framework |

| ISO/IEC 5259-5 | Part 5: Data quality governance framework |

5. Tips for fulfilling the legal requirements

Tip 1: Use interpretability

General

Auditors should no longer be satisfied with the sweeping statement that machine learning procedures represent black boxes.

“There are promising approaches in the current research literature for checking the plausibility of deep learning model predictions. For example, when classifying images, it is possible to see which input pixels are crucial for the classification (see below).

However, no standard methods have yet been established, as the current models and algorithms have different strengths and weaknesses, and the current status quo is in a heuristic phase. Nevertheless, it can be assumed that research in this area will progress further towards explainability in the next few years.”

Many approaches currently “only” focus on interpreting specific individual predictions based on the input data (local interpretability).

Example

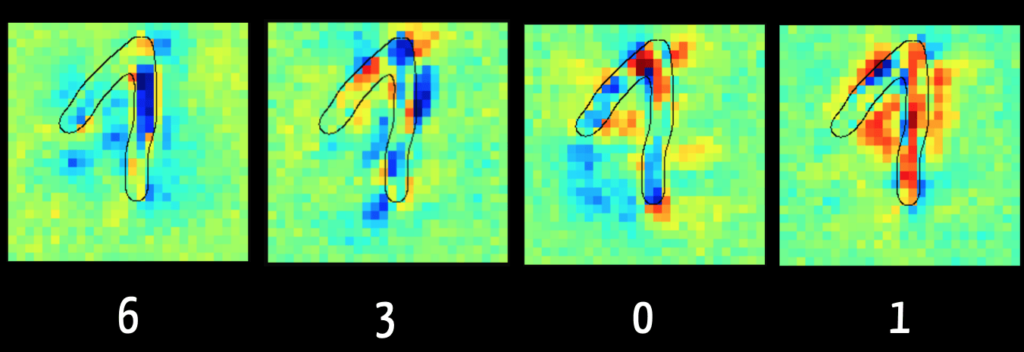

With Layer-wise Relevance Propagation (LRP), it is possible for some models to see which input data (“features”) were crucial for the algorithm's decision, for example, for a classification.

The left-hand image in Fig. 5 shows that the algorithm can exclude the digit “6” mainly because of the dark blue marked pixels. This makes sense because in the case of a “6,” this area typically has no pixels. By contrast, the right-hand image shows the pixels reinforcing the algorithm’s assumption that the digit is a “1”.

The algorithm tends to view the pixels in the ascending flank of the digit as detrimental to a classification as “1”. The reason is that it was trained on images in which the “1” is written only as a vertical line, as in the USA. This illustrates how important it is for the training data to be representative of the data to be classified later.
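The idea of attributing a classification to individual input pixels can be illustrated with a deliberately simplified sketch. It is not an implementation of LRP for deep networks: it assumes a single linear score without a bias term, for which the contribution of each pixel is simply weight times pixel value and the contributions add up to the score. The weights and pixel values are hypothetical.

```python
def linear_relevance(weights, pixels):
    """Per-pixel contribution to a linear class score: r_i = w_i * x_i."""
    return [w * x for w, x in zip(weights, pixels)]


def strongest_evidence(relevance):
    """Indices of the pixel speaking most for and most against the class."""
    most_for = max(range(len(relevance)), key=lambda i: relevance[i])
    most_against = min(range(len(relevance)), key=lambda i: relevance[i])
    return most_for, most_against


# Hypothetical flattened 2x2 image and hypothetical weights for the class "1"
pixels = [1.0, 0.0, 1.0, 1.0]
weights_for_class_1 = [0.8, -0.5, 0.6, -0.9]

relevance = linear_relevance(weights_for_class_1, pixels)
score = sum(relevance)  # equals the linear class score (no bias term assumed)
most_for, most_against = strongest_evidence(relevance)
print(relevance, score, most_for, most_against)
```

Positive values correspond to pixels that reinforce the classification, negative values to pixels that speak against it, which is the kind of information the colored pixels in the figure convey.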

The book “Interpretable Machine Learning” by Christoph Molnar, available online free of charge, is particularly worth reading.

Tip 2: Regularly determine the state of the art

Manufacturers are well-advised not to give a blanket answer when auditors ask about the state of the art but to distinguish between:

- Technical implementation: Relevant standards such as those mentioned here help to prove that the development and verification or validation of the software and models meets current best practices.

- Performance parameters: Manufacturers should compare performance using classic techniques / algorithms and other machine learning models and algorithms. This comparison should be based on all relevant attributes, such as sensitivity, specificity, robustness, performance, repeatability, interpretability, and acceptance.

Tip 3: Work with the AI guideline

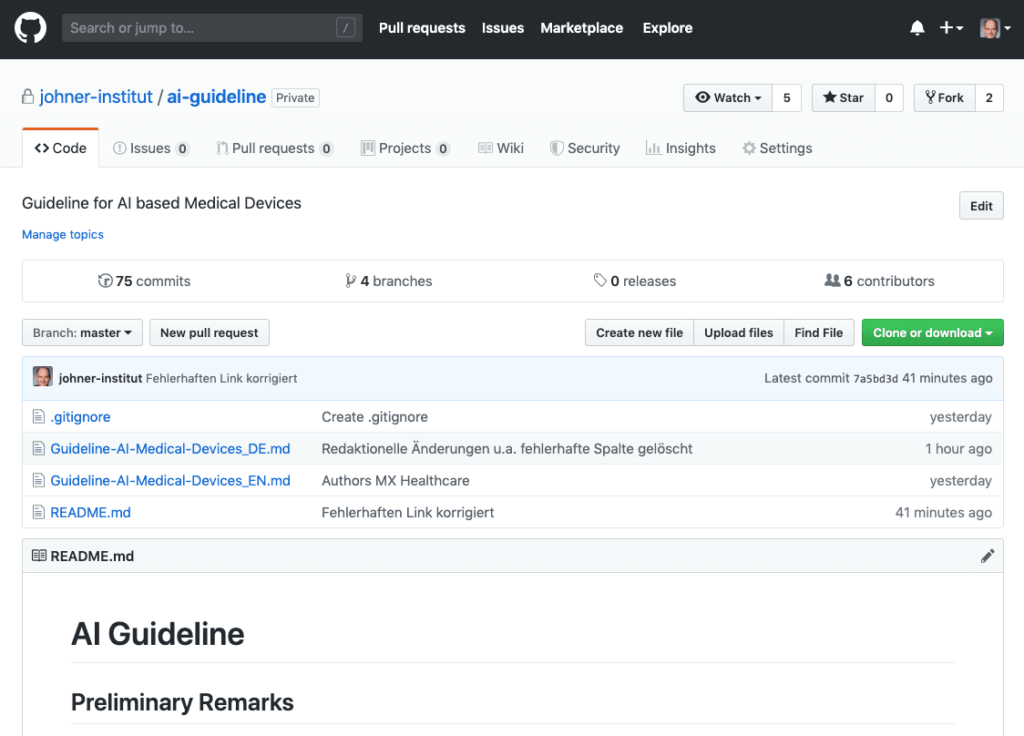

The guideline for applying artificial intelligence (AI) in medical devices is now available for free on GitHub.

We developed this guideline together with notified bodies, manufacturers, and AI experts.

- It helps manufacturers of AI-based devices develop them in compliance with the law and bring them to market quickly and safely.

- Internal and external auditors and notified bodies use the guideline to verify the legal compliance of AI-based medical devices and the associated life cycle process.

Use the Excel version of the guideline, which is available here free of charge. This version allows you to filter the requirements of the guideline, transfer them to your standard operating procedures, and adapt them to your specific situation.

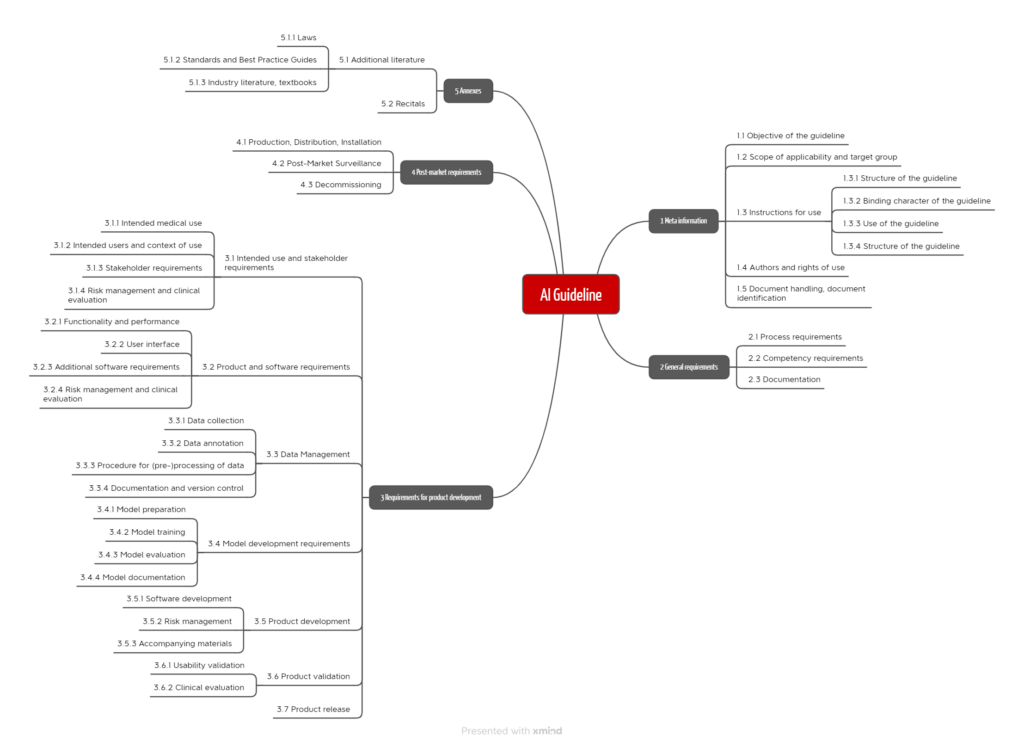

When writing it, it was important to us to provide manufacturers and notified bodies with precise test criteria that allow for a clear and unambiguous assessment. Furthermore, the process approach is in the foreground. The requirements of the guideline are grouped along these processes (see Fig. 7).

Tip 4: Prepare for typical audit questions

General

Notified bodies and authorities have not yet agreed on a uniform approach and common requirements for medical devices with machine learning.

As a result, manufacturers regularly struggle to prove that the requirements placed on the device are met, for example, in terms of accuracy, correctness, and robustness.

Dr. Rich Caruana, one of Microsoft’s leaders in Artificial Intelligence, even advised against using a neural network he developed himself to suggest the appropriate therapy for patients with pneumonia:

“I said no. I said we don’t understand what it does inside. I said I was afraid.”

Dr. Rich Caruana, Microsoft

It is not new that there are machines that a user does not understand. You can use a PCR device without understanding it, because there are definitely people who know how this device works internally. With artificial intelligence, however, that is no longer a given.

Key questions

Questions auditors should ask manufacturers of machine learning devices include:

| Key question | Background |

| Why do you think your device is state of the art? | Classic introductory question. Here you should address technical and medical aspects. |

| How do you come to believe that your training data has no bias? | Otherwise, the outputs would be incorrect or correct only under certain conditions. |

| How did you avoid overfitting your model? | Otherwise, the algorithm would correctly predict only the data it was trained with. |

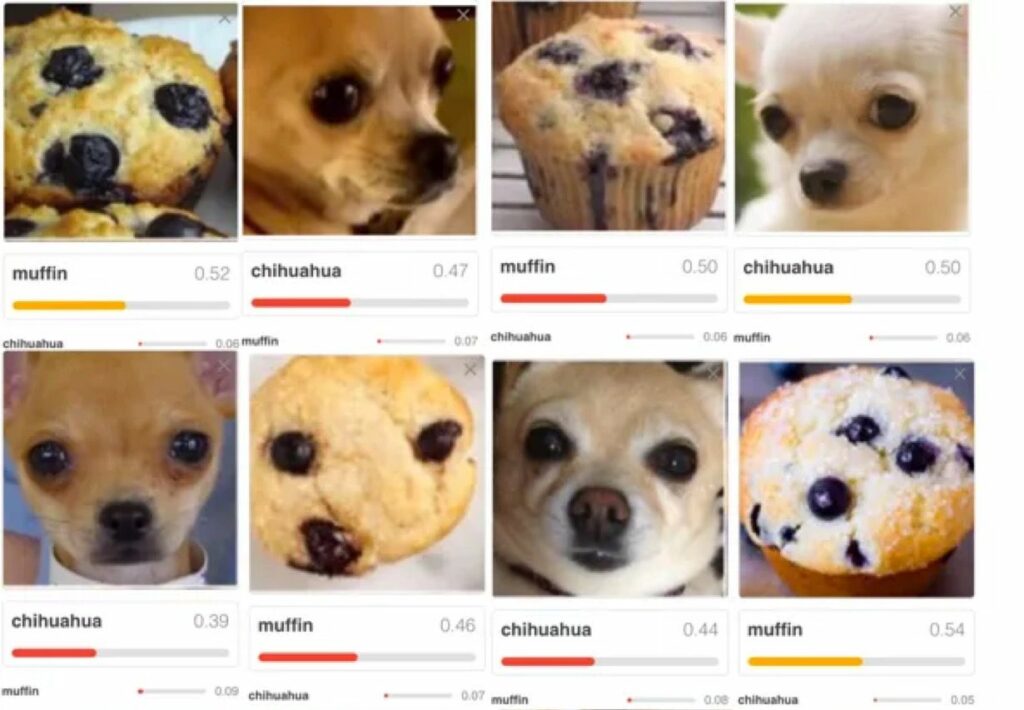

| What leads you to believe that the outputs are not just randomly correct? | For example, it could be that an algorithm correctly decides that a house can be recognized in an image. However, the algorithm did not recognize a house but the sky. Another example is shown in Fig. 5. |

| What conditions must data meet in order for your system to classify them correctly or predict the outputs correctly? Which boundary conditions must be met? | Because the model has been trained with a specific set of data, it can only make correct predictions for data that come from the same population. |

| Wouldn’t you have gotten a better output with a different model or with different hyperparameters? | Manufacturers must minimize risks as far as possible. This includes risks from incorrect predictions of suboptimal models. |

| Why do you assume that you have used enough training data? | Collecting, preprocessing, and “labeling” training data is costly. The larger the amount of data with which a model is trained, the more powerful it can be. |

| Which standard did you use when labeling the training data? Why do you consider the chosen standard as gold standard? | Especially when the machine begins to outperform humans, it becomes difficult to determine whether a doctor, a group of “normal” doctors, or the world’s best experts in a specialty are the reference. |

| How can you ensure reproducibility as your system continues to learn? | Especially in continuously learning systems (CLS), it must be ensured that performance at least does not decrease due to further training. |

| Have you validated systems that you use to collect, prepare, and analyze data, as well as train and validate your models? | An essential part of the work consists of collecting and preprocessing the training data and training the model with it. The software required for this is not part of the medical device. However, it is subject to the requirements for Computerized Systems Validation. |
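Two of the questions in the table, on overfitting and on continued learning, lend themselves to simple automated checks. The sketch below is one possible approach under stated assumptions: the thresholds, metric names, and values are hypothetical, and the frozen test set is assumed to be independent of all training data.

```python
def generalization_gap(train_metric: float, validation_metric: float) -> float:
    """Difference between performance on training data and on held-out data."""
    return train_metric - validation_metric


def looks_overfitted(train_metric: float, validation_metric: float,
                     max_gap: float = 0.05) -> bool:
    """A large gap suggests the model memorized its training data."""
    return generalization_gap(train_metric, validation_metric) > max_gap


def accept_retrained_model(old_metrics: dict, new_metrics: dict,
                           tolerance: float = 0.0) -> bool:
    """Accept a retrained model only if no metric on the frozen test set
    drops by more than the tolerance compared with the released model."""
    return all(
        new_metrics[name] >= old_metrics[name] - tolerance
        for name in old_metrics
    )


# Hypothetical accuracy values
print(looks_overfitted(train_metric=0.99, validation_metric=0.80))
print(looks_overfitted(train_metric=0.91, validation_metric=0.90))

# Hypothetical metrics of the released and the retrained model
released = {"sensitivity": 0.90, "specificity": 0.85}
retrained = {"sensitivity": 0.92, "specificity": 0.84}
print(accept_retrained_model(released, retrained))
print(accept_retrained_model(released, retrained, tolerance=0.02))
```

Such a gate does not replace a documented evaluation, but it makes the acceptance criterion for further training explicit and repeatable.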

The above-mentioned issues are typically also discussed in the context of risk management according to ISO 14971 and clinical evaluation according to MEDDEV 2.7/1 revision 4 (or performance evaluation of IVDs).

The article on artificial intelligence in medicine provides information on how manufacturers can comply with regulatory requirements for medical devices with machine learning. Read also the article on how to avoid clinical studies for devices with artificial intelligence.

Tip 5: Get support

The Johner Institute supports manufacturers of medical devices that use artificial intelligence to

- develop and market their devices in compliance with the law,

- plan and carry out verification and validation activities,

- evaluate their devices in terms of benefit, performance, and safety,

- assess the suitability of procedures (in particular models) and training data,

- comply with regulatory requirements in the post-market phase, and

- create customized standard operating procedures.

Here, you will find a more complete overview.

Tip 6: Avoid typical mistakes made by AI startups

Many startups that use Artificial Intelligence (AI) procedures, especially machine learning, begin product development with the data. In the process, they often make the same mistakes:

| Mistake | Consequences |

| The software and the processes for collecting and preprocessing the training data have not been validated. Regulatory requirements are known in rudimentary form at best. | In the worst case, the data and models cannot be used. This throws the whole development back to the beginning. |

| The manufacturers do not derive the declared performance of the devices from the intended use and the state of the art but from the performance of the models. | The devices fail clinical evaluation. |

| People whose real passion is data science or medicine try their hand at enterprise development. | The devices never make it to market or don’t meet the real need. |

| The business model remains too vague for too long. | Investors hold back and/or the company dries up financially and fails. |

Startups can contact us. In a few hours, we can help avoid these fatal mistakes.

6. Summary and conclusion

a) Regulatory requirements

The regulatory requirements are clear. However, it remains unclear to manufacturers, and in some cases also to authorities and notified bodies, how these are to be interpreted and implemented in concrete terms for medical devices that use machine learning procedures.

b) Too many and only conditionally helpful best practice guides

As a result, many institutions feel called upon to help with “best practices.” Unfortunately, many of these documents are of limited help:

- They reiterate textbook knowledge about artificial intelligence in general and machine learning in particular.

- They get bogged down in self-evident facts and banalities. Anyone who didn’t know before reading these documents that machine learning can lead to misclassification and bias, putting patients at risk or at a disadvantage, should not be developing medical devices.

- Many of these documents are limited to listing issues specific to machine learning that manufacturers must address. Best practices on how to minimize these problems are lacking.

- When there are recommendations, they are usually not very specific. They do not provide sufficient guidance for action.

- It is likely to be difficult for manufacturers and authorities to extract truly testable requirements from textual clutter.

Unfortunately, there seems to be no improvement in sight; on the contrary, more and more guidelines are being developed. For example, the OECD recommends the development of AI/ML-specific standards and is currently developing one itself. The same is true for the IEEE, DIN, and many other organizations.

Conclusion:

- There are too many standards to keep track of, and more are being added all the time.

- The standards overlap heavily and are mostly of limited benefit. They do not contain test criteria that can be decided as pass or fail (binary decidable).

- They come (too) late.

c) Quality instead of quantity

In machine learning best practices and standards, medical device manufacturers need more quality, not quantity.

Best practices and standards should guide action and set verifiable requirements. The fact that the WHO is taking up the Johner Institute’s guidance is cause for cautious optimism.
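To illustrate what a verifiable, binary decidable requirement could look like, the following minimal Python sketch checks diagnostic sensitivity and specificity against minimum values. The thresholds (0.95 and 0.90), the names `AcceptanceCriterion` and `meets_criterion`, and the sample data are hypothetical and not taken from any standard or guideline. A real acceptance criterion would have to be derived from the intended purpose and the state of the art.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """A requirement that can only pass or fail (hypothetical example)."""
    min_sensitivity: float
    min_specificity: float


def sensitivity_specificity(y_true, y_pred):
    """Compute sensitivity and specificity for binary labels (1 = diseased)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("Test set must contain positive and negative cases")
    return tp / (tp + fn), tn / (tn + fp)


def meets_criterion(y_true, y_pred, criterion):
    """Return True or False; there is no 'partially fulfilled'."""
    sens, spec = sensitivity_specificity(y_true, y_pred)
    return sens >= criterion.min_sensitivity and spec >= criterion.min_specificity


# Illustrative data only; the thresholds are assumptions, not normative values.
criterion = AcceptanceCriterion(min_sensitivity=0.95, min_specificity=0.90)
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(meets_criterion(y_true, y_pred, criterion))
```

The point of the sketch is the return type: an auditor can decide whether the requirement is met without interpretation. In practice, such a criterion would presumably also have to specify the test data and the statistical confidence, which this sketch leaves out.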

It would be desirable if the notified bodies, the authorities, and, where appropriate, the MDCG were more actively involved in the (further) development of these standards. This should be done in a transparent manner. We have recently seen several times how modest the results are when work is done in backrooms without (external) quality assurance.

With a collaborative approach, it would be possible to reach a common understanding of how medical devices that use machine learning should be developed and tested. There would only be winners.

Notified bodies and authorities are cordially invited to participate in further developing the guidelines. An e-mail to the Johner Institute is sufficient.

Manufacturers who would like support in the development and approval of ML-based devices (e.g., in the review of technical documentation or in the validation of ML libraries) are welcome to contact us via e-mail or the contact form.

Change history:

2026

- 2026-01-29: Added a longer paragraph on the new FDA guidance in section 2.b).

2025

- 2025-12-27:

  - Chapter 2. a): Repeal of the Executive Order and notes on NIST requirements added

  - Chapter 2. c) “Requirements of individual states” added

  - Chapter 3. a) Last sentence supplemented with registration requirements

  - Chapter 3. c) “Other countries” added

- 2025-11-28: References to ISO 24971-2 and IEC 62366-1 added in section 1.a)

- 2025-11-20: Reference to “digital omnibus” added in section 1.b)

- 2025-10-22: Extensive changes

  - Introduction rewritten

  - Key takeaways added

  - Reference to keyword article added

  - Chapter 1.a): Heading renamed, introductory sentence changed, reference to missing standards formulated as a warning

  - Chapter 1.c) (other standards) deleted

  - Chapter 2.a) reworded and shortened

  - Chapter 4 (standards, best practices) completely rewritten. Updated and significantly shortened

  - Tables renumbered

- 2025-09-04: In section 2.c) new FDA guidance on predetermined change control plans mentioned

- 2025-04-14:

  - Contents of chapter 4.q) moved to chapter 4.w), since both concern the IMDRF

  - Chapter 4.q) completely rewritten. It now covers the ISO/IEC 5259 family of standards

  - In chapter 4.r), the reference to the new ISO 24971-2 added

  - In chapter 4.x), the announced evaluation of the standard ISO/IEC 5338:2023 added

  - Chapter 4. z) on ISO/IEC 4213:2022 added

- 2025-02-22: Chapter 2.b) (AI Act) completely “gutted” and the content moved to the article on the AI Act, where it was restructured and updated; link added in section 4.v) to article on ISO/IEC 42001; sections 4.x) and 4.y) added; link added to TeamNB in section 4.p)

- 2025-01-16: Section 5 newly inserted and structured

2024

- 2024-07-08: New FDA guidelines added to chapter 2.c), IMDRF guideline added to chapter 4.w)

- 2024-03-26: Link to the passed AI Act added

- 2024-01-18: Chapter 4.v) that evaluates ISO 42001 and note on the compromise proposal on the AI Act added

2023

- 2023-11-20: New chapter 4.s) with ISO/NP TS 23918 added

- 2023-11-10: Chapter structure revised, FDA requirements added, new BS standards mentioned

- 2023-10-09: Chapter 2.n) that evaluates ISO 23053 added

- 2023-09-07: Chapter 2.s) that evaluates AAMI 34971:2023-05-30 added

- 2023-07-03: Link to BS/AAMI 34971:2023-05-30 added

- 2023-06-27: Section 1(b)(i): Note added that the AI Act applies to medical devices/IVDs of higher risk classes

- 2023-06-23: FDA guidance document from April 2023 added

- 2023-06-08: Chapter 4 added

- 2023-02-14: Section 2.r) added

2022 and earlier

- 2022-11-17: Link to new draft of AI Act added

- 2022-10-21: Link to EU updates on AI Act added

- 2022-06-27: In the chapter on the FDA, its guidance document on radiological imaging and the sources of error mentioned there added

- 2021-11-01: Chapter with FDA’s Guiding Principles added

- 2021-09-10: Link with the statement for the EU added

- 2021-07-31: In section 1.b.ii) in the table, two rows with additional criticisms added, one paragraph each in the line with definitions added

- 2021-07-26: Section 2.n) moved to section 1.b), critique and call to action there added

- 2021-04-27: Section on EU planning on new AI regulation added
