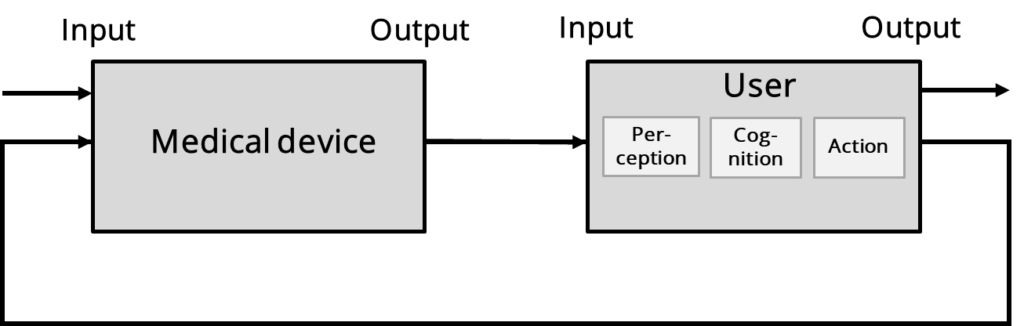

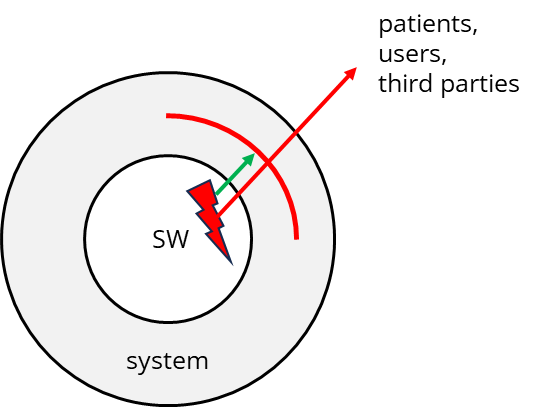

Risk analysis for software starts from one observation: software itself cannot cause harm. Harm always occurs via hardware or people. However, this does not mean that software needs no risk analysis. The opposite is the case!

What distinguishes risk analysis for software from that for other medical devices

In the case of (standalone) software, harm can only occur “indirectly,” for example, if

- the software displays incorrect information, and users (e.g., doctors) therefore treat patients incorrectly,

- the software displays the correct information, but the user misreads it (perception) and therefore acts incorrectly,

- the user reads the information correctly but misunderstands it (cognition) and therefore acts incorrectly, or

- the user enters incorrect data or selects the wrong option in the software (e.g., due to a lack of usability), which may lead to an incorrect display or other output on the medical device.

Granularity in risk management for software

Risk analysis for software: The right level of granularity

How detailed should the risk analysis be for software?

- Not too granular: Risk analysis down to the individual line of code is too granular in most cases. It would bloat the risk management file, cost an unnecessary amount of time, and, worse, prevent you from seeing the wood for the trees, so that you overlook risks.

- Not too coarse: On the other hand, an analysis of only the entire software system is often insufficient to meet the requirements of IEC 62304.

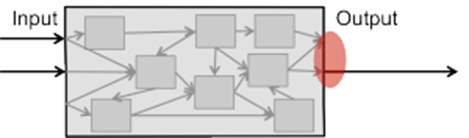

We recommend analyzing the risks of faulty software at least for the software items at the first building block level. That is, you should examine all top-level components of the software system in the software architecture to see what can happen if

- the component receives an incorrect input, and

- the component transmits something wrong to the outside via its interfaces.

This requires knowing what external interfaces each software item has. To find out how to specify these interfaces and analyze them for risks, please refer to the chapter “Risk analysis for software and software items.”
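The two questions above can be turned into a simple checklist per software item. The following is only a minimal sketch: the item names (`DoseCalculator`, `DisplayModule`), the interface names, and the helper `failure_questions` are hypothetical and serve purely as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoftwareItem:
    """A top-level component with its external interfaces (hypothetical model)."""
    name: str
    inputs: tuple[str, ...] = ()
    outputs: tuple[str, ...] = ()


def failure_questions(items):
    """Return one question per interface: wrong input received, wrong output sent."""
    questions = []
    for item in items:
        for port in item.inputs:
            questions.append(
                f"{item.name}: what can happen if input '{port}' is incorrect?"
            )
        for port in item.outputs:
            questions.append(
                f"{item.name}: what can happen if output '{port}' is wrong?"
            )
    return questions


# Illustrative architecture, not taken from any real device.
ITEMS = [
    SoftwareItem("DoseCalculator", inputs=("patient_weight",), outputs=("dose_mg",)),
    SoftwareItem("DisplayModule", inputs=("dose_mg",), outputs=("screen_text",)),
]

if __name__ == "__main__":
    for question in failure_questions(ITEMS):
        print(question)
```

Each generated question is a starting point for the analysis, not its result. The answers still have to come from people who know the device and its clinical context.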

Software risk control: the right level of granularity

The risk analysis for software will likely identify risks that you, as a manufacturer, must minimize as far as possible. Ideally, you will succeed in implementing these risk-minimizing measures outside the software system.

But you will not always succeed in doing exactly that. In those cases, you will need risk-minimizing measures within the software system.

In these cases, it is necessary to identify and manage risks more thoroughly in your software. For example, one or more software items will be connected to a database via an “SQL interface.” But how will you ensure that no erroneous data is stored in the database via this interface?

You will need to examine each component connected to the database to see what the consequences would be if that component receives incorrect data from the database. In exactly these cases, the risk analysis of your software cannot be limited to the first component level.
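One possible risk control inside the software is a plausibility check on everything a component reads from the database. The sketch below uses Python's built-in `sqlite3` module. The table `patients`, the column `weight_kg`, the limits in `WEIGHT_RANGE_KG`, and the function names are assumptions made up for this example and do not come from any standard.

```python
import sqlite3

# Assumed plausibility limits for illustration only.
WEIGHT_RANGE_KG = (0.3, 400.0)


class ImplausibleDataError(ValueError):
    """Raised when data read from the database fails the plausibility check."""


def read_patient_weight(conn, patient_id):
    """Read a weight and refuse to pass on missing or implausible values."""
    row = conn.execute(
        "SELECT weight_kg FROM patients WHERE id = ?", (patient_id,)
    ).fetchone()
    if row is None:
        raise ImplausibleDataError(f"no record for patient {patient_id}")
    weight = row[0]
    low, high = WEIGHT_RANGE_KG
    if not isinstance(weight, (int, float)) or not low <= weight <= high:
        raise ImplausibleDataError(f"implausible weight for patient {patient_id}")
    return float(weight)


def demo_connection():
    """Build an in-memory database with one valid and one invalid record."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patients (id INTEGER PRIMARY KEY, weight_kg REAL)")
    conn.executemany(
        "INSERT INTO patients VALUES (?, ?)", [(1, 72.5), (2, -5.0)]
    )
    return conn
```

The design choice here is that the reading component fails loudly instead of continuing with a value it cannot trust. What the system should do after such a failure (for example, block a calculation and inform the user) is itself a question for the risk analysis.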

Risk analysis for software and software items

Strictly speaking, you cannot perform any risk analysis at all for software items. However, you can estimate what external misbehavior a software item may have – at least if you have precisely specified the software’s interfaces. A software item can only have the following interface types:

- Interfaces to other software systems or software items: You can describe these interfaces as an API, a WSDL, or a bus specification, for example. You can learn more about this in the interoperability and software requirements articles.

- Interface to the user (UI): Here, you should distinguish two sub-cases as part of the risk analysis:

  - The interface does not behave as specified and displays incorrect information: What can happen now? How often will a user cause harm to patients because of this error?

  - The interface behaves as specified and displays the correct information – but users misread it, misunderstand it, or act incorrectly because of it. This is exactly why we need usability and IEC 62366. These are the risks that can occur because of a lack of usability.

- Interface to the operating system: Operating systems provide functionalities such as memory management, file access, management of processes and threads, etc. Therefore, as part of your risk analysis for software items, you should investigate what happens if the component does not behave as specified, allocates too much memory, does not get promised memory, cannot access the file system, affects other processes, etc.
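For the operating system interface, it can help to make the failure case an explicit, defined result that the rest of the software (and the risk analysis) can reason about. The following is a minimal sketch for file access only. The function name `load_text_or_reason` and its return convention are assumptions for this example.

```python
from pathlib import Path


def load_text_or_reason(path):
    """Return (text, None) on success or (None, reason) if the OS call fails.

    OSError covers failures such as a missing file or denied permissions.
    """
    try:
        return Path(path).read_text(encoding="utf-8"), None
    except OSError as exc:
        # Report the failure type so the caller can choose a safe reaction.
        return None, type(exc).__name__


if __name__ == "__main__":
    text, reason = load_text_or_reason("/path/that/does/not/exist.cfg")
    if reason is not None:
        print(f"file access failed: {reason}")
```

The caller then has to decide what a safe reaction looks like, for example refusing to start rather than running with default values.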


Risk analysis for software modifications

Changing the (embedded) software of your medical device will affect the inner workings of your device in the form of one or more components. I recommend that you then analyze, in the manner of an FMEA, what would happen if one of these components now had a fault. You trace the chain of causes from the component to the “edge of the device” and on to the patient.

To do this, you need the software architecture or system architecture for “the inner chain of causes.” Changes to the software architecture can result in components being re-linked to each other or to the inputs and outputs. In the case of new or changed (system) requirements, there may even be new externally visible kinds of behavior.
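Tracing the inner chain of causes can be pictured as a walk through the architecture from the changed component to the device's outputs. The sketch below assumes the architecture is available as a simple "sends data to" graph. The component names and the function `affected_outputs` are hypothetical.

```python
from collections import deque

# Hypothetical architecture: each component maps to the components it feeds.
ARCHITECTURE = {
    "SensorDriver": ["SignalFilter"],
    "SignalFilter": ["AlarmLogic", "TrendDisplay"],
    "AlarmLogic": ["AlarmOutput"],
    "TrendDisplay": [],
    "AlarmOutput": [],
}

# Components that sit at the "edge of the device" (assumed for this example).
EXTERNAL_OUTPUTS = {"TrendDisplay", "AlarmOutput"}


def affected_outputs(architecture, changed, external_outputs):
    """Breadth-first search from the changed component to reachable outputs."""
    seen = {changed}
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for successor in architecture.get(node, ()):
            if successor not in seen:
                seen.add(successor)
                queue.append(successor)
    return sorted(seen & external_outputs)


if __name__ == "__main__":
    print(affected_outputs(ARCHITECTURE, "SignalFilter", EXTERNAL_OUTPUTS))
```

A traversal like this only shows which externally visible behaviors a fault could reach. Whether and how a fault actually propagates along each path still has to be judged for each component.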

If there are new “behaviors visible on the outside,” you will also need to reevaluate the external causal chain. This may be more elaborate and require involving context experts and/or clinicians.

If there are no new externally visible behaviors, you must consider whether the change increases or decreases the likelihood that the system will misbehave externally. If so, the risks would change.

Only if there are no new externally visible behaviors and the likelihood of external misbehavior has not changed can you be sure that the risk is unchanged. These considerations are exactly what risk analysis consists of.
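The reasoning of the last three paragraphs can be summarized as a small decision rule. This is only a sketch of the logic described above. The class `ChangeAssessment`, its field names, and the wording of the returned actions are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeAssessment:
    """Answers to the two questions asked for every software change."""
    new_external_behavior: bool
    misbehavior_likelihood_changed: bool


def required_action(assessment):
    """Map the two answers to the follow-up step described in the text."""
    if assessment.new_external_behavior:
        return "re-evaluate the external causal chain"
    if assessment.misbehavior_likelihood_changed:
        return "re-estimate the affected risks"
    return "risk unchanged"


if __name__ == "__main__":
    print(required_action(ChangeAssessment(False, True)))
```

Answering the two questions is the actual work. The rule only makes explicit that "risk unchanged" is a conclusion you reach after both answers are "no", not a default.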

In a video training in the Medical Device University, you will learn how you can “link” the inner and outer chains with the help of tools.

If you now feel that software changes involve quite a lot of work, you have understood this article correctly. Also, keep in mind that both ISO 14971 and the even more specific IEC 62304 have clear requirements for analyzing the consequences of (software) changes.