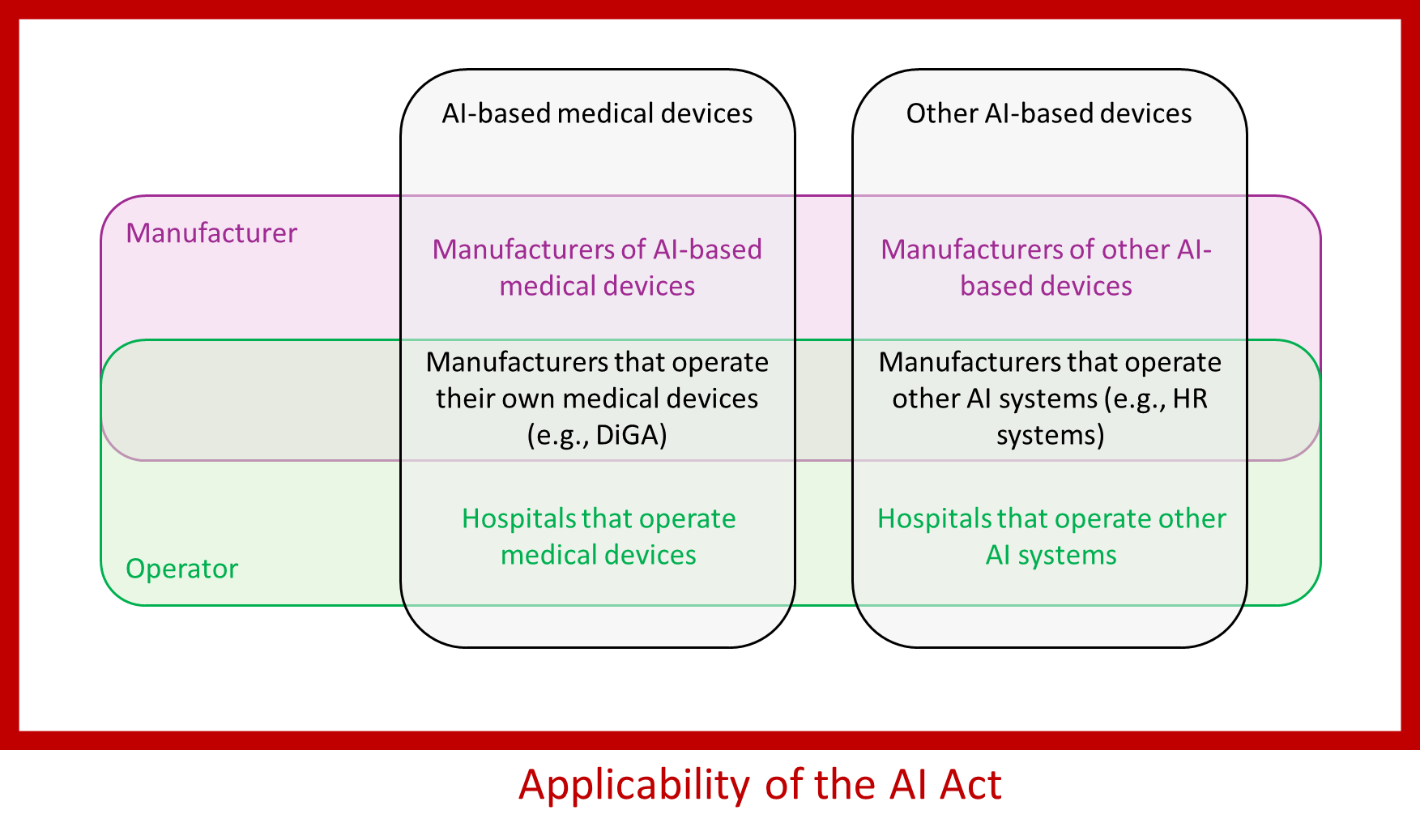

This article examines whether the AI Act applies to manufacturers of medical devices and IVDs that do not place AI-based devices on the market.

Among other things, it answers the question of whether a manufacturer must comply with the AI Act if it uses ChatGPT or develops an AI system for its own use, for example, one that classifies customer feedback.

This article uses the term “operator” synonymously with the term “deployer” used in the AI Act.

This article does not cover the case in which operators take on the provider’s (manufacturer’s) obligations under Article 25 of the AI Act, for example, because they modify the AI system.

Manufacturers of AI-based systems should refer to the main article on the AI Act.

1. Regulatory framework

1.1 Scope

The AI Act defines its scope in Article 2:

This Regulation applies to:

(a) providers placing on the market or putting into service AI systems or placing on the market general-purpose AI models in the Union, irrespective of whether those providers are established or located within the Union or in a third country;

(b) deployers of AI systems that have their place of establishment or are located within the Union;

The regulation also defines the term deployer:

a natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity;

The applicability of the AI Act thus extends to providers (manufacturers) and deployers (operators).
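The two quoted provisions can be read as a simple check. The following is a minimal sketch, not a legal test: the class `AiUse`, its field names, and both functions are hypothetical and only restate the wording of Article 2(1)(b) and the deployer definition quoted above.

```python
from dataclasses import dataclass


@dataclass
class AiUse:
    """Hypothetical description of how a person or organization uses an AI system."""
    uses_under_own_authority: bool
    personal_non_professional: bool
    established_or_located_in_union: bool


def is_deployer(use: AiUse) -> bool:
    # Deployer definition: use under own authority, except in the course
    # of a personal non-professional activity.
    return use.uses_under_own_authority and not use.personal_non_professional


def in_scope_as_deployer(use: AiUse) -> bool:
    # Article 2(1)(b): deployers established or located within the Union.
    return is_deployer(use) and use.established_or_located_in_union


# A manufacturer in the Union using an AI system for business purposes
manufacturer = AiUse(True, False, True)
# A private individual using the same system at home
private_user = AiUse(True, True, True)
print(in_scope_as_deployer(manufacturer), in_scope_as_deployer(private_user))
```

The sketch deliberately ignores the other scope clauses of Article 2 that are not quoted in this article.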

1.2 High-risk AI systems

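According to Article 6, AI systems are high-risk if they

- are a product, or the safety component of a product, that falls under the EU harmonisation legislation listed in Annex I (which includes the MDR and IVDR) and requires a third-party conformity assessment, or

- are listed in Annex III, unless they do not pose a significant risk of harm to the health, safety, or fundamental rights of natural persons.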

Annex III includes, among others:

- Biometric systems

- Systems for education and training that decide on access to education or are used to assess learning outcomes or level of education

- Systems that decide on the selection, recruitment, promotion, or termination of employment relationships

1.3 Transition periods

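Article 111 regulates the transition periods for AI systems already placed on the market or put into service. Note that “operators” in the quoted text is the AI Act’s own, broader term (Article 3(8)), which covers providers and deployers, among others: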

(2) Without prejudice to the application of Article 5 as referred to in Article 113(3), point (a), this Regulation shall apply to operators of high-risk AI systems, other than the systems referred to in paragraph 1 of this Article, that have been placed on the market or put into service before 2 August 2026, only if, as from that date, those systems are subject to significant changes in their designs. In any case, the providers and deployers of high-risk AI systems intended to be used by public authorities shall take the necessary steps to comply with the requirements and obligations of this Regulation by 2 August 2030.
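The first sentence of the quoted paragraph boils down to a date comparison plus one condition. The sketch below illustrates this under simplifying assumptions: the function and parameter names are hypothetical, the systems referred to in paragraph 1 of Article 111 and the application of Article 5 are left out, and whether a change counts as “significant” remains a judgment the code cannot make.

```python
from datetime import date

# Dates taken from the quoted Article 111(2)
CUTOFF = date(2026, 8, 2)
PUBLIC_AUTHORITY_DEADLINE = date(2030, 8, 2)


def regulation_applies(first_use: date, significant_design_change: bool) -> bool:
    """Hypothetical helper for a high-risk AI system outside Article 111(1).

    first_use: date the system was placed on the market or put into service.
    significant_design_change: True if the design changed significantly
    on or after the cutoff date.
    """
    if first_use >= CUTOFF:
        # Not a legacy system, so the transition rule does not help.
        return True
    # Legacy system: covered only after a significant design change.
    return significant_design_change


print(regulation_applies(date(2025, 3, 1), significant_design_change=False))
print(regulation_applies(date(2025, 3, 1), significant_design_change=True))
```

Systems intended to be used by public authorities would additionally need to be checked against `PUBLIC_AUTHORITY_DEADLINE`, which this sketch only records as a constant.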

2. Requirements

2.1 Requirements for competence

Article 4 of the regulation requires providers and operators alike to ensure competence:

Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used.

The regulation also defines the term “AI literacy”:

skills, knowledge and understanding that allow providers, deployers and affected persons, taking into account their respective rights and obligations in the context of this Regulation, to make an informed deployment of AI systems, as well as to gain awareness about the opportunities and risks of AI and possible harm it can cause;

2.2 Requirements for operators of high-risk AI systems

The AI Act (Article 26) requires the operators of high-risk AI systems to do the following:

- Use the system as intended by the manufacturer

- Ensure the competence of the users

- Monitor the system, including human oversight

- Keep logs (“records”)

- Report risks and serious incidents to the manufacturer and the authorities (see also Article 73)

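In addition, operators may be obliged to

- carry out a fundamental rights impact assessment (Article 27); this applies only to certain operators, such as bodies governed by public law and private entities providing public services

- register the use of the system (Article 49); this only applies to systems listed in Annex III (for example, education and HR), and Article 49(3) addresses operators that are public authorities or Union bodies

- provide information to persons affected by a decision (Article 86); this only applies to systems listed in Annex III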

2.3 Requirements for operators of “certain AI systems”

Article 50 defines requirements for several types of AI systems:

| AI systems that | Requirement for operators |
| --- | --- |
| interact directly with natural persons | No operator obligations (providers must design the systems so that individuals are informed) |
| serve to recognize emotions | Inform the persons exposed to the system and comply with data protection law, among other things |
| manipulate image, audio, text, or video content | Disclose deepfakes or manipulation |

3. Answers

3.1 Almost all-clear

As a first approximation, manufacturers that only use and operate AI systems, but do not manufacture them, are not affected by the AI Act apart from the competence requirements.

For example, the AI Act does not regulate the mere use of LLMs such as ChatGPT. AI may also be used in QM processes, for example, to automate or optimize processes (such as chatbots for customer communication) or to check the conformity of devices or processes.

3.2 Exceptions to the all-clear

However, there are exceptions.

- These include high-risk AI systems, particularly the systems referred to in Annex III. For medical device manufacturers, these are likely to be systems used in an HR context, especially for training, further training, and personnel decisions. Whether the manufacturer developed these systems itself or uses systems developed by third parties is irrelevant.

- If manufacturers operate their own AI-based medical devices or IVDs, they also count as operators of high-risk systems. An example is a manufacturer operating the server for a mobile medical app, where the server runs AI algorithms and is a medical device or part of one.
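The all-clear and its two exceptions can be summarized as a small decision helper. This is a rough sketch that mirrors sections 3.1 and 3.2 only: the class `OperatedSystem`, its fields, and the function name are hypothetical, and the sketch leaves out Article 50 as well as the additional obligations for Annex III systems.

```python
from dataclasses import dataclass


@dataclass
class OperatedSystem:
    """Hypothetical description of an AI system that a manufacturer operates."""
    name: str
    annex_iii_use: bool = False       # e.g. training or personnel decisions
    own_medical_device: bool = False  # manufacturer operates its own AI-based device


def applicable_requirements(system: OperatedSystem) -> list[str]:
    # The competence requirements apply to every operator (section 2.1).
    requirements = ["AI literacy (Article 4)"]
    # Both exceptions in section 3.2 make the manufacturer an operator
    # of a high-risk AI system.
    if system.annex_iii_use or system.own_medical_device:
        requirements.append("Operator obligations for high-risk AI systems (Article 26)")
    return requirements


chatbot = OperatedSystem("customer chatbot")
hr_tool = OperatedSystem("applicant screening", annex_iii_use=True)
for system in (chatbot, hr_tool):
    print(system.name, "->", applicable_requirements(system))
```

A helper like this can support a first inventory of the AI systems in use, but it does not replace a case-by-case regulatory assessment.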

3.3 Observe medical device law

Requirements under medical device legislation and standards (e.g., MDR, IVDR, ISO 13485) apply regardless of the AI Act. For example, manufacturers that use an AI system as part of their QM processes should be aware of the requirements for computerized system validation.

The Johner Institute supports manufacturers and operators in achieving compliance with the AI Act. Possible support includes

- an assessment of which regulatory requirements apply to the respective company,

- project plans,

- templates for SOPs, work instructions, and records,

- qualification of the responsible persons, and

- tips for avoiding unnecessary effort.